A residual current detector (RCD), or residual current circuit breaker (RCCB), detects leakage current when an alternate path to ground is established. RCDs isolate the power source from the leakage path by tripping the circuit open. Unlike fuses, these circuit breakers can be reset and reused. They play an important role in protecting people and equipment.

In this post, I will review the leakage current detection and tripping requirements for RCDs and show how ultra-low-power voltage supervisors, or reset ICs, can be used as leakage current detectors. I will also explain how voltage supervisors benefit medium-voltage breakers, such as air circuit breakers (ACBs) and molded case circuit breakers (MCCBs), that use a microcontroller.

Using a voltage supervisor as a leakage current threshold detector

Leakage currents occur when a malfunctioning device, or a person unintentionally touching the line, creates an alternate path to ground. Because prolonged exposure to high leakage currents can cause bodily harm, RCDs are designed to respond to currents from as low as 5 mA up to 500 mA. According to the International Electrotechnical Commission (IEC) TS 60479-1 standard, a person exposed to 50 mA for 200 ms will experience muscle contraction; exposures longer than 3 s increase the probability of ventricular fibrillation.

An RCD detects leakage current by sensing the difference between the active line current and the neutral current. If the two currents are not balanced and the difference (the leakage current) exceeds a predetermined threshold, the circuit breaker trips, interrupting and isolating the power source. RCD equipment can be broken into three main stages. In the first stage, a leakage sensor senses the leakage current. In the second stage, the detect circuit compares the sensed current against the leakage current threshold. In the third and final stage, a solenoid relay trips a switch open to isolate the leakage path from the source.
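The three stages can be illustrated with a small behavioral model. This is only a sketch under simplifying assumptions: the class name `ResidualCurrentDetector`, its methods, and the 30 mA threshold in the usage note are hypothetical, and a real RCD works on AC waveforms with time-dependent trip behavior rather than single current samples.

```python
from dataclasses import dataclass


@dataclass
class ResidualCurrentDetector:
    """Toy model of the three RCD stages: sense, detect, trip (names are hypothetical)."""

    threshold_a: float      # leakage trip threshold in amperes, chosen by the designer
    tripped: bool = False   # True once the relay has opened the circuit

    def sense(self, line_a: float, neutral_a: float) -> float:
        """Sensing stage: the leakage current is the line/neutral imbalance."""
        return abs(line_a - neutral_a)

    def update(self, line_a: float, neutral_a: float) -> bool:
        """Detect stage compares against the threshold; trip stage latches open."""
        if self.sense(line_a, neutral_a) > self.threshold_a:
            self.tripped = True  # stays open until someone resets the breaker
        return self.tripped

    def reset(self) -> None:
        """Unlike a fuse, the breaker can be reset and reused."""
        self.tripped = False
```

For example, with `threshold_a=0.030`, a 10 mA imbalance leaves the model closed, while a 50 mA imbalance trips it and keeps it open until `reset()` is called.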

In the past, electromechanical devices served as the detect circuit that set the leakage current threshold. Modern RCDs use integrated circuits such as voltage supervisors to improve the accuracy and response time of detecting leakage current and driving a solenoid relay.

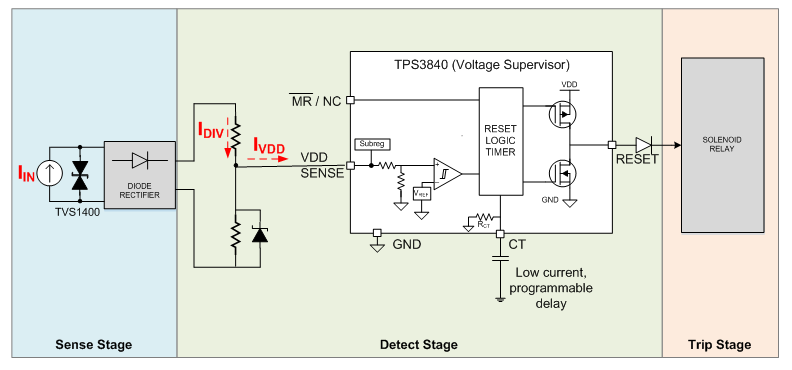

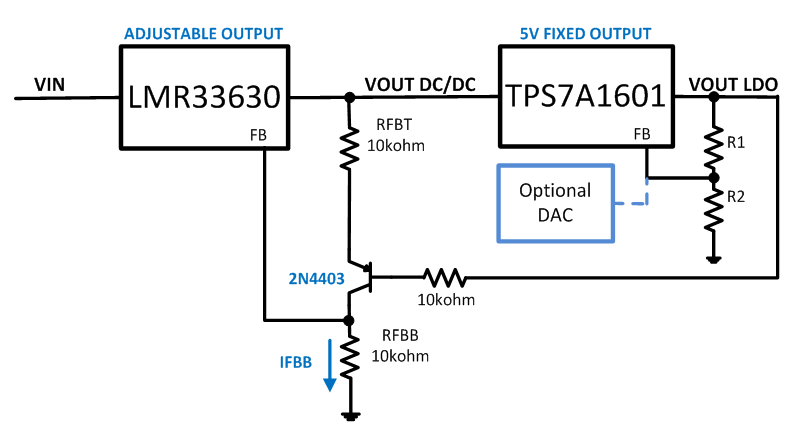

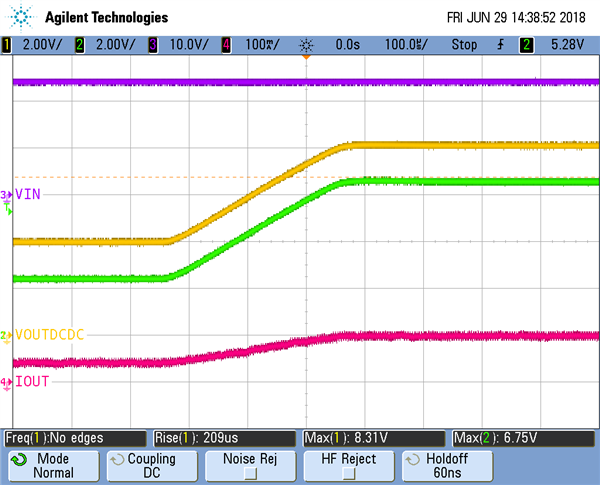

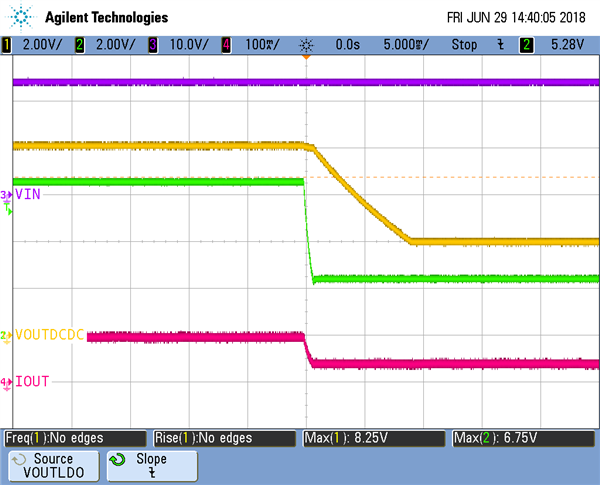

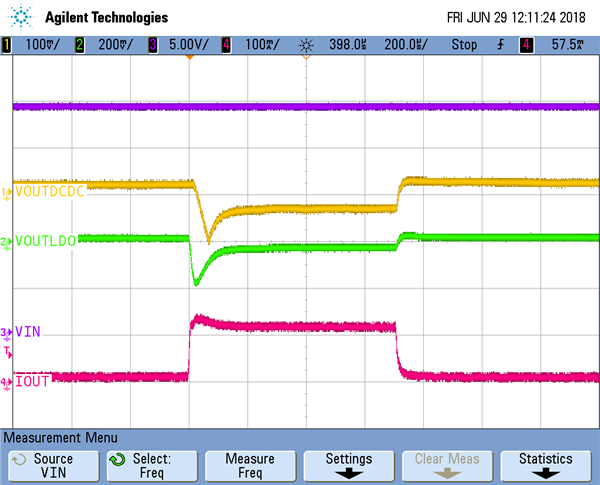

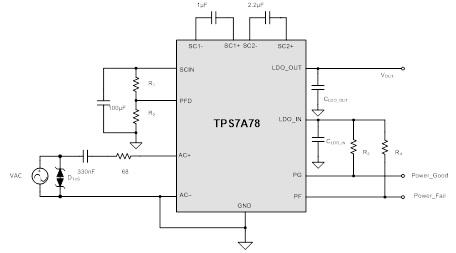

Figure 1 shows how TI’s TPS3840 voltage supervisor can detect leakage current. In this example, the leakage sensor, such as a differential current transformer or a zero-phase current transformer, is represented by a current source. In the current detect circuit, a resistor divider converts the input current to a voltage, which the TPS3840 then detects. The TPS3840 integrates a precise bandgap voltage reference and a voltage comparator. The trip point is accurately programmed at the factory in one-time programmable (OTP) nonvolatile memory, and the voltage threshold can be set from 1.6 V to 4.9 V with 1% typical accuracy.

When the voltage at the VDD pin rises above the threshold, the RESET pin pulls high to interrupt a microcontroller or drive a solenoid relay. In addition, RESET response time can be extended with a single external capacitor to meet varying RCD response times based on the magnitude of the leakage current.

Figure 1: A voltage supervisor used as a leakage current detector in the Nano power, wide VIN (12-V max) supervisor reference design used as a comparator or power sequencer
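To make the current-to-voltage conversion concrete, here is a rough sizing sketch. It assumes a heavily simplified model that is not taken from the reference design: the sensor current flows through a single equivalent resistance, the voltage across it appears at VDD, and the supervisor's own supply current is ignored. The function names and the 30 µA sensor current in the usage note are hypothetical.

```python
def sense_resistance_ohms(v_threshold: float, i_trip: float) -> float:
    """Equivalent resistance that produces v_threshold when i_trip flows through it."""
    return v_threshold / i_trip


def trip_current_window(v_threshold: float, r_sense: float,
                        tolerance: float = 0.01) -> tuple[float, float]:
    """Range of trip currents when the threshold varies by +/- tolerance (1% assumed)."""
    low = v_threshold * (1.0 - tolerance) / r_sense
    high = v_threshold * (1.0 + tolerance) / r_sense
    return low, high
```

With a 1.6 V threshold and a hypothetical 30 µA sensor current at the desired trip point, `sense_resistance_ohms(1.6, 30e-6)` gives roughly 53.3 kΩ, and the 1% threshold accuracy translates into a trip window of about 29.7 µA to 30.3 µA. Resistor tolerance and sensor accuracy would widen that window in practice.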

The voltage supervisor in this reference design meets three key specifications:

Fast power-up and programmable response times. When a voltage supervisor ramps up from a zero input voltage to above the trip point or threshold voltage, it takes a certain amount of time for it to start and react. Circuit breakers need to quickly detect the leakage current level and have flexibility in configuring the response time to trip based on leakage level and duration to avoid nuisance tripping caused by transients. With a startup time of 200 µs, the TPS3840 can quickly respond.

Ultra-low input current. In Figure 1, the power pin for the supervisor IC (VDD) is the same as the input signal monitoring pin (SENSE). Because the supervisor is powered from the input signal, it should have high input impedance so that the current it draws (IIN) is a negligible error relative to the current through the voltage divider (IDIV). The TPS3840 voltage supervisor consumes ultra-low current, with a typical IQ of 350 nA.

Low VPOR for low VOL. VPOR is the minimum input voltage required for a controlled output state. When VIN < VPOR, the output tracks the input and may trigger the relay. VPOR should be as low as possible to provide margin between the relay’s enable voltage level and the voltage supervisor’s low output voltage level (VOL). The TPS3840 push-pull active-low version has both a low VPOR and a VOL of 300 mV to avoid a false reset.
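The input-current point above can be turned into a quick error estimate. The sketch below assumes that the supervisor's input current is roughly equal to its typical IQ and that the resulting relative error is simply IIN divided by IDIV; both are first-order approximations, and the function names are hypothetical.

```python
TPS3840_IQ_TYP_A = 350e-9  # typical quiescent current quoted in this post


def loading_error(i_in: float, i_div: float) -> float:
    """First-order relative error from the supervisor drawing i_in out of the divider."""
    return i_in / i_div


def min_divider_current(i_in: float, max_error: float) -> float:
    """Smallest divider current that keeps the loading error at or below max_error."""
    return i_in / max_error
```

Under these assumptions, keeping the loading error below 1% with a 350 nA input current requires at least 35 µA through the divider; a supervisor that draws more current would need a proportionally larger divider current for the same error.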

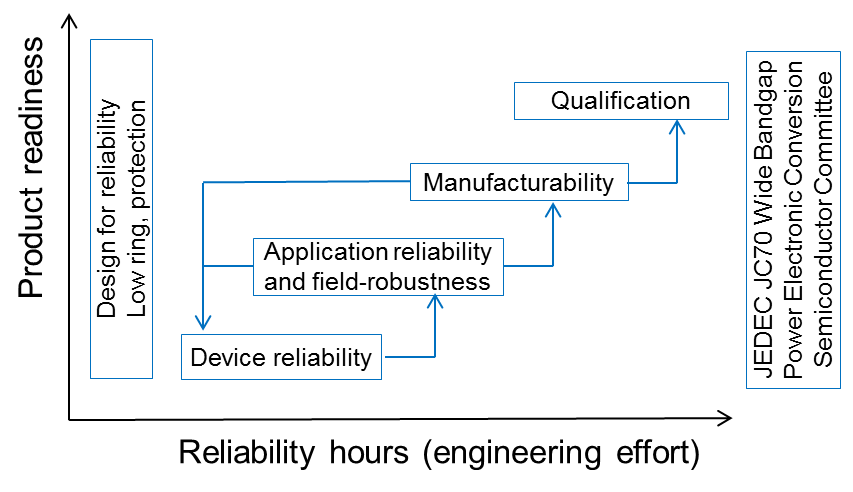

Using a voltage supervisor as a microcontroller supply voltage monitor

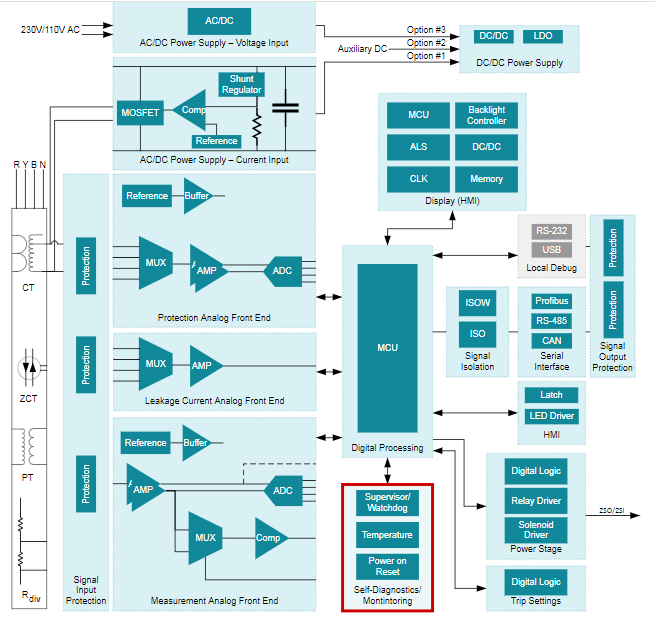

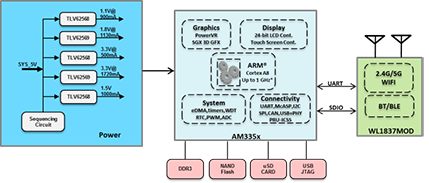

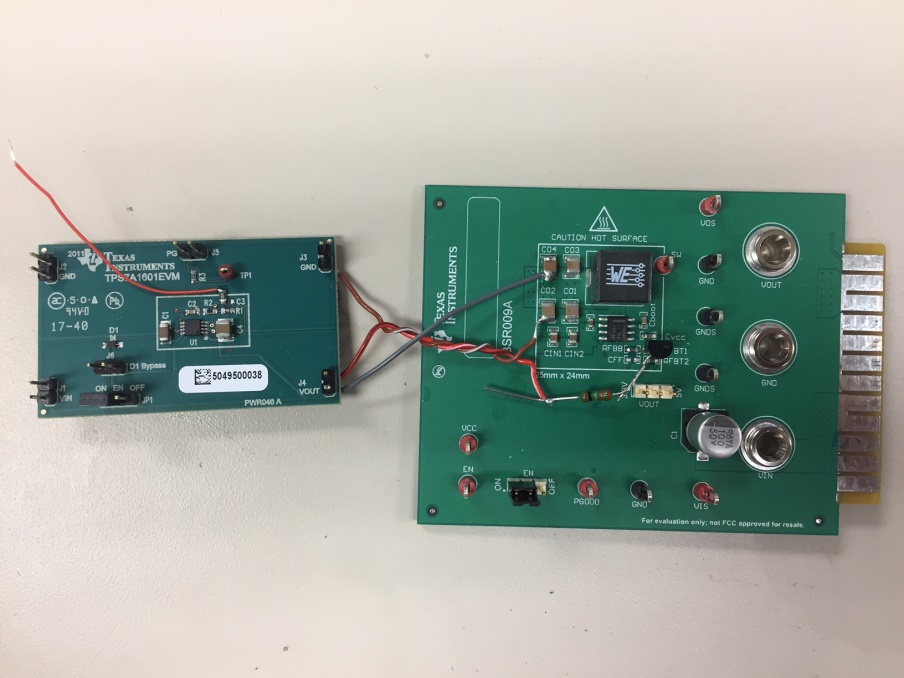

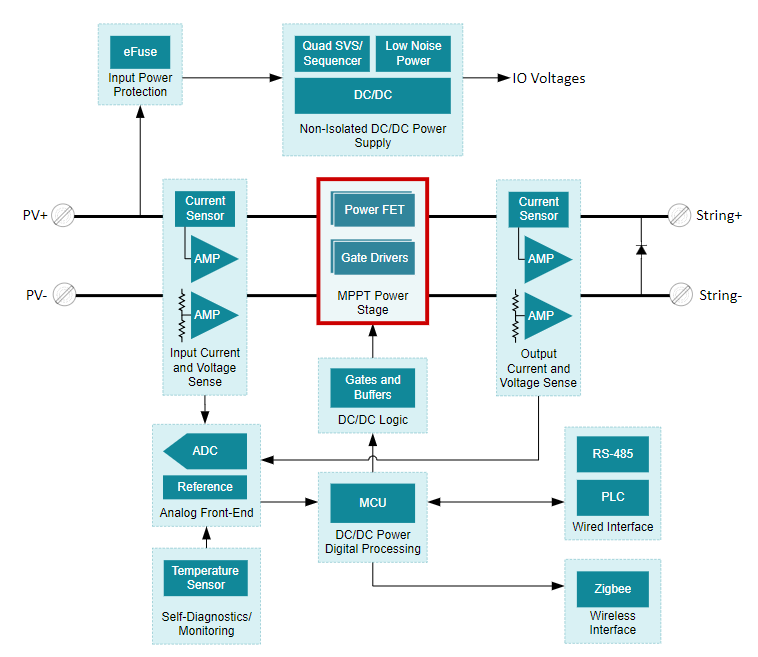

Figure 2 shows a block diagram of an ACB and MCCB reference design. These circuit breakers require microcontrollers to process captured data and detect overcurrent and ground current faults. A low-IQ voltage supervisor such as the LM8364 or TPS3840 can monitor the power rails. The TPS3840 has a wider operating temperature range and lower IQ than the LM8364.

If the voltage supervisor is integrated in the microcontroller, then an external watchdog timer is recommended. An external watchdog helps ensure that the microcontroller does not hang: it periodically checks for pulses sent from one of the microcontroller’s general-purpose input/output pins. If the software glitches and a pulse is missed, the external watchdog timer resets the microcontroller.

The TPS3430 programmable watchdog timer is a good option, since it offers a programmable watchdog timeout and reset time delay to meet the timing requirements of any microcontroller. If enhanced reliability is required, then both a voltage supervisor and a watchdog should be implemented. The TPS3823, which integrates a watchdog and a voltage supervisor, is a good alternative, offering fixed threshold and watchdog timeout options.
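The watchdog behavior described above can be sketched as a simple timeout model. This is a generic illustration rather than a model of the TPS3430 or TPS3823: the class name `ExternalWatchdog`, its methods, and the 100 ms timeout in the usage note are hypothetical, and reset pulse width and startup delay are left out.

```python
class ExternalWatchdog:
    """Generic timeout watchdog: expects a pulse from the MCU within timeout_s."""

    def __init__(self, timeout_s: float) -> None:
        self.timeout_s = timeout_s
        self.last_kick_s = 0.0   # time of the most recent pulse from the MCU
        self.reset_count = 0     # how many times the MCU has been reset

    def kick(self, now_s: float) -> None:
        """Called when the MCU toggles its GPIO to signal that it is alive."""
        self.last_kick_s = now_s

    def poll(self, now_s: float) -> bool:
        """Return True if the timeout expired and a reset was issued."""
        if now_s - self.last_kick_s > self.timeout_s:
            self.reset_count += 1
            self.last_kick_s = now_s  # the timer restarts after the reset
            return True
        return False
```

With a 100 ms timeout, a pulse at 50 ms followed by silence leads to a reset the next time the watchdog is polled after 150 ms, which is the "missed pulse" case the text describes.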

Figure 2: Signal Processing Subsystem and Current Input Based Self Power for Breaker Applications (ACB/MCCB) reference design block diagram

Voltage supervisors can help enhance your circuit breaker designs by not only monitoring the microcontroller’s voltage supply to ensure normal operation, but also serving as a leakage current detector.

Additional resources

To find the best voltage supervisors for your system, check out the “Voltage Supervisors (Reset ICs) Quick Reference Guide.”

Read the sixth question in the “Voltage Supervisors (Reset ICs): Frequently Asked Questions” application report for more info on the total IDD of a system.