TI is committed to continually improving the online design experience. On July 5, we will introduce our new, fully redesigned HTML5 application for WEBENCH® Power Designer. In this post, I will walk you through the new enhancements, which are designed to help you make power design decisions faster and more easily.

The input form

The first thing you’ll notice is our redesigned input form, displayed in Figure 1 below. You can use this form to quickly look up a TI device that you may have in mind or start your search using basic inputs. The advanced settings are now organized to guide you toward designs that meet your criteria, and the optimization knob is now a Design Consideration toggle.

Figure 1: Re-designed input form

Select a design screen

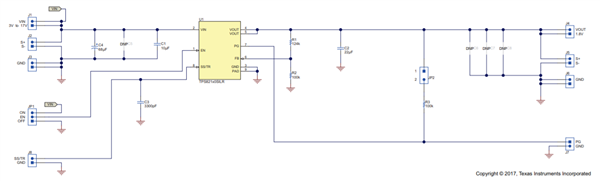

The first step in the power design process is to select your design. WEBENCH Power Designer previously calculated operating values and generated a thumbnail of what the schematic may look like. New optimized algorithms now enable you to generate full power designs. Our large selection of filters lets you narrow down which design would best fit your needs. For users who are used to the original Flash version, there’s still a table view option. However, a new card view, pictured below in Figure 2, is the default view in the selection step.

This card view has additional features that enable you to:

- View and download actual design schematics, bill of materials and operating charts.

- Click to compare multiple designs side by side.

- Link directly to more information and make a purchase.

- Share designs and print a WEBENCH PDF design report when logged in.

Figure 2: Select screen card view

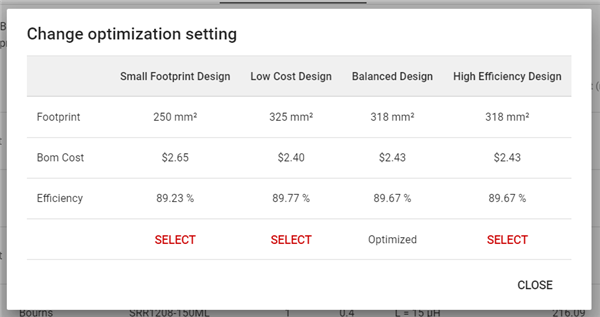

The compare designs feature

Figure 2 above shows the new selection screen, where a check box on each design lets you pick designs to compare. This new feature generates a table, displayed in Figure 3, with additional information such as integrated circuit (IC) parameters and IC features, enabling a side-by-side comparison of multiple designs.

Figure 3: Select screen compare popup

New layout

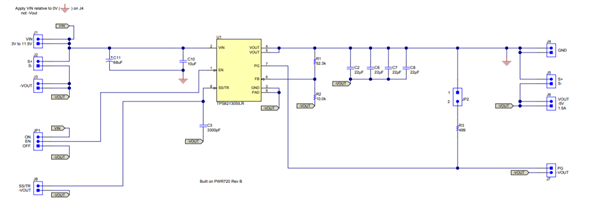

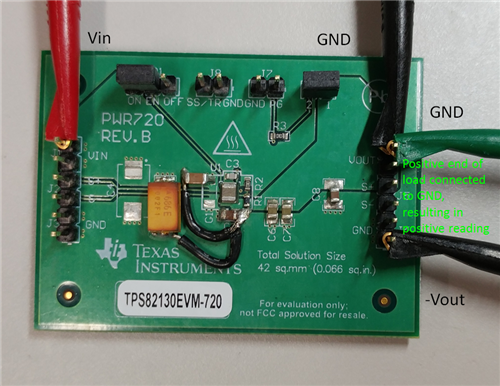

The customize, simulate and export design steps from our former version of WEBENCH Power Designer have been split from a single screen into three new screens, with logical steps to guide you through the power design flow.

Figure 4 below is a screenshot of the new Customize screen. You can view your design upfront, customize parameters on the left, and see the effects of your customizations on operating values and performance below. You will also see in Figure 5 that we have removed the optimization knob. Instead, the design values are calculated upfront for comparison, which simplifies the process and helps you make the best decision to meet your optimization needs.

Figure 4: Customize screen

Figure 5: Optimize your design

Once you are done customizing, you can verify your design by running an electrical simulation in the next screen. Finally, you can move on to the export screen, which displays an overview of your final design. Clear buttons prompt you to export to your most-used CAD tools, print a PDF design report, or circle back to TI.com for more information, such as downloading a datasheet, going to the TI Store, or exploring the product folder.

Mark your calendars for our July 5 launch date and subscribe to TI’s Power House blog community for further content on how to best navigate our new interface.